Using var speechRecognizer = new SpeechRecognizer(speechConfig, audioConfig) Ĭonsole.WriteLine("Speak into your microphone.") Using var audioConfig = AudioConfig.FromDefaultMicrophoneInput() using System Īsync static Task FromMic(SpeechConfig speechConfig) Then initialize SpeechRecognizer by passing audioConfig and speechConfig. To recognize speech by using your device microphone, create an AudioConfig instance by using FromDefaultMicrophoneInput(). Regardless of whether you're performing speech recognition, speech synthesis, translation, or intent recognition, you'll always create a configuration. With an authorization token: pass in an authorization token and the associated region/location.A key or authorization token is optional. With an endpoint: pass in a Speech service endpoint.You can initialize SpeechConfig in a few other ways: Var speechConfig = SpeechConfig.FromSubscription("YourSpeechKey", "YourSpeechRegion")

For more information, see Create a new Azure Cognitive Services resource.

Create a Speech resource on the Azure portal. This class includes information about your subscription, like your key and associated location/region, endpoint, host, or authorization token.Ĭreate a SpeechConfig instance by using your key and location/region. To call the Speech service by using the Speech SDK, you need to create a SpeechConfig instance.

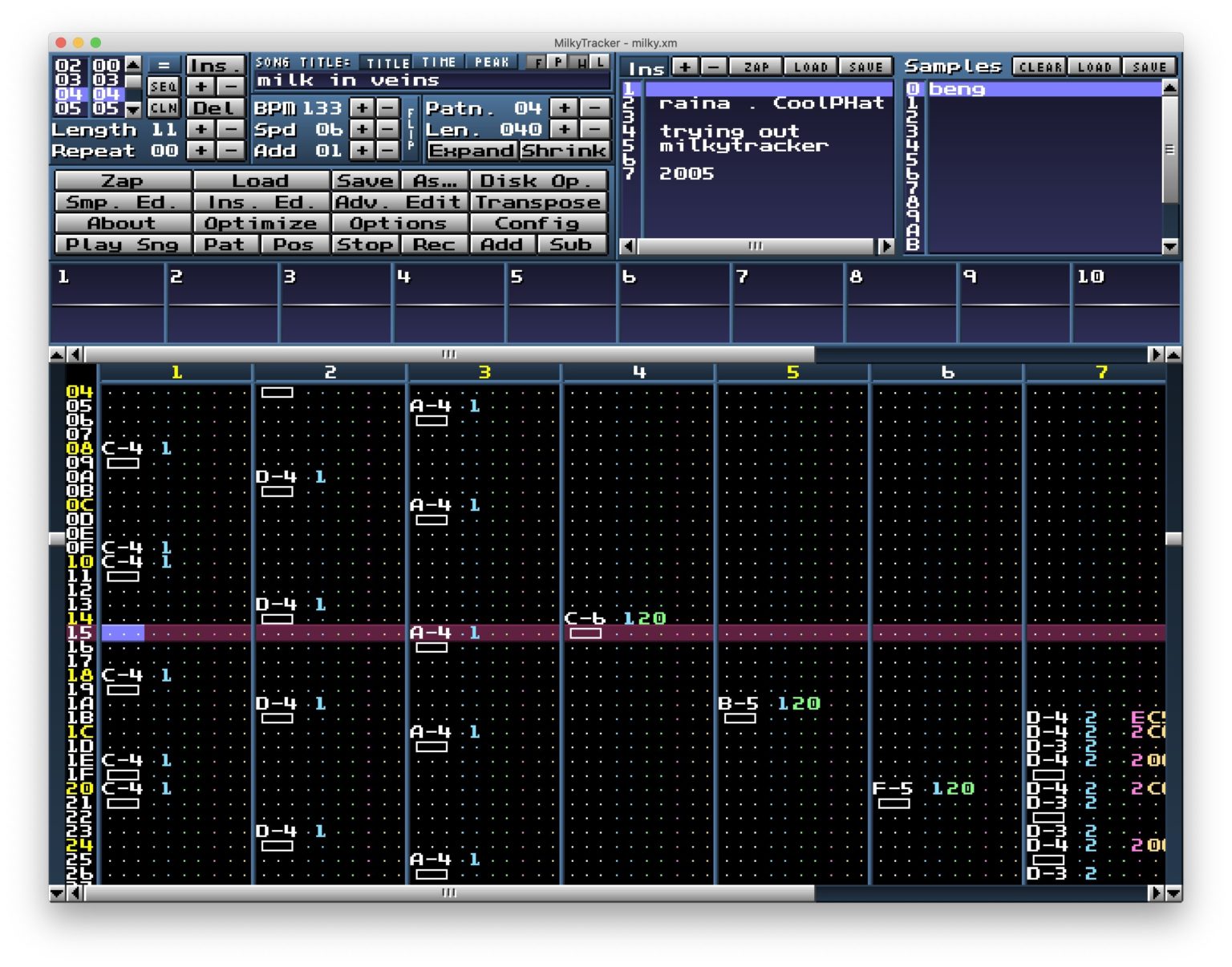

#Stop continuous note milkytracker how to

In this how-to guide, you learn how to recognize and transcribe speech to text in real-time. Reference documentation | Package (NuGet) | Additional Samples on GitHub
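The FromMic snippet stops after it creates the recognizer. The next step might look like the minimal sketch below, which assumes the Microsoft.CognitiveServices.Speech NuGet package. The sketch calls RecognizeOnceAsync to capture a single utterance and then branches on result.Reason. RecognizeOnceAsync, ResultReason, and CancellationDetails.FromResult are SDK members. The MicRecognition class, the RecognizeOnceFromMic method, and the console messages are hypothetical and only for illustration.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.CognitiveServices.Speech;
using Microsoft.CognitiveServices.Speech.Audio;

// Hypothetical helper class; only the Speech SDK types it uses are real.
static class MicRecognition
{
    // Recognizes one utterance from the default microphone.
    // Returns the recognized text, or null when nothing usable was recognized.
    public static async Task<string> RecognizeOnceFromMic(SpeechConfig speechConfig)
    {
        using var audioConfig = AudioConfig.FromDefaultMicrophoneInput();
        using var speechRecognizer = new SpeechRecognizer(speechConfig, audioConfig);

        Console.WriteLine("Speak into your microphone.");
        // RecognizeOnceAsync completes after a single utterance has been processed.
        SpeechRecognitionResult result = await speechRecognizer.RecognizeOnceAsync();

        switch (result.Reason)
        {
            case ResultReason.RecognizedSpeech:
                return result.Text;
            case ResultReason.NoMatch:
                Console.WriteLine("NOMATCH: Speech could not be recognized.");
                return null;
            case ResultReason.Canceled:
                // Cancellation details explain why recognition stopped (for example, an invalid key).
                var cancellation = CancellationDetails.FromResult(result);
                Console.WriteLine($"CANCELED: Reason={cancellation.Reason}");
                if (cancellation.Reason == CancellationReason.Error)
                {
                    Console.WriteLine($"CANCELED: ErrorDetails={cancellation.ErrorDetails}");
                }
                return null;
            default:
                return null;
        }
    }
}
```

A caller could pass in the speechConfig created with FromSubscription, for example `var text = await MicRecognition.RecognizeOnceFromMic(speechConfig);`. The sketch returns null for the NoMatch and Canceled cases to stay short. Real code would probably report those cases to the caller in a more explicit way.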
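The section above mentions other ways to initialize SpeechConfig. A rough sketch of those paths follows, again assuming the Microsoft.CognitiveServices.Speech package. SpeechConfig.FromEndpoint, SpeechConfig.FromAuthorizationToken, and the AuthorizationToken property are SDK members. The SpeechConfigOptions class and its method names are hypothetical wrappers. The endpoint URI, key, token, and region values must come from your own Speech resource.

```csharp
using System;
using Microsoft.CognitiveServices.Speech;

// Hypothetical helper class; only the SpeechConfig members it calls come from the SDK.
static class SpeechConfigOptions
{
    // Endpoint-based configuration. The key may be omitted; in that case an
    // authorization token can be assigned to the config instead.
    public static SpeechConfig WithEndpoint(Uri endpoint, string key = null, string authorizationToken = null)
    {
        SpeechConfig config = key != null
            ? SpeechConfig.FromEndpoint(endpoint, key)
            : SpeechConfig.FromEndpoint(endpoint);

        if (authorizationToken != null)
        {
            config.AuthorizationToken = authorizationToken;
        }
        return config;
    }

    // Token-based configuration: the token is paired with its region/location.
    public static SpeechConfig WithAuthorizationToken(string authorizationToken, string region) =>
        SpeechConfig.FromAuthorizationToken(authorizationToken, region);
}
```

Either result can be passed to SpeechRecognizer in place of the FromSubscription config, for example `var config = SpeechConfigOptions.WithAuthorizationToken(token, "YourSpeechRegion");`.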
